Over the last few years, the PLM community and vendors have gotten used to the word “cloud”. Every vendor now seems to have a cloud PLM, cloud-ready, or cloud-enabled solution. However, cloud seems to be old news. I got an email from TechTarget telling me that the “word of today” is multi-cloud. Check the article here.

There is no single multi-cloud infrastructure vendor. Instead, a multi-cloud strategy typically involves a mix of the major public cloud providers, such as Amazon Web Services (AWS), Google, Microsoft and IBM.

Multi-cloud computing, as noted above, commonly refers to the use of multiple public cloud providers, and is more of a general approach to managing and paying for cloud services in the way that seems best for a given organization.

There are several commonly cited advantages to multi-cloud computing, such as the ability to avoid vendor lock-in, the ability to find the optimal cloud service for a particular business or technical need, increased redundancy, and the other beneficial uses mentioned above.

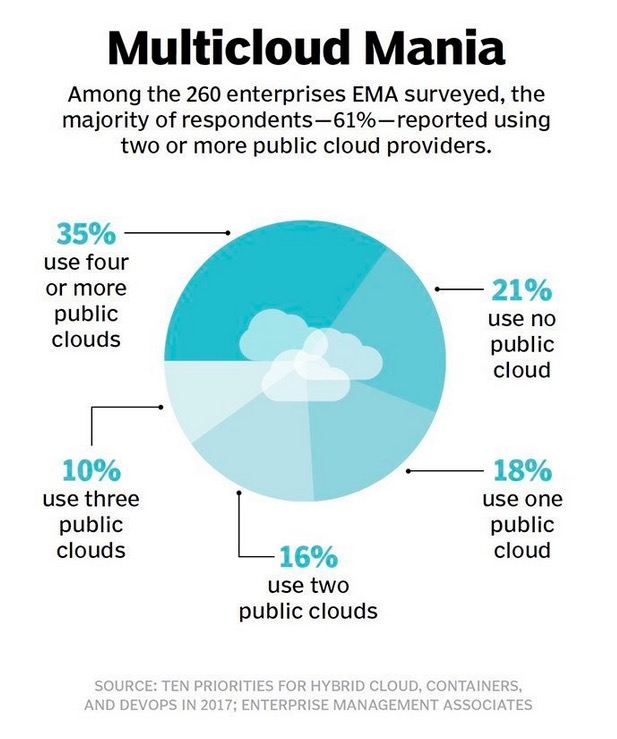

The same article offers some statistics.

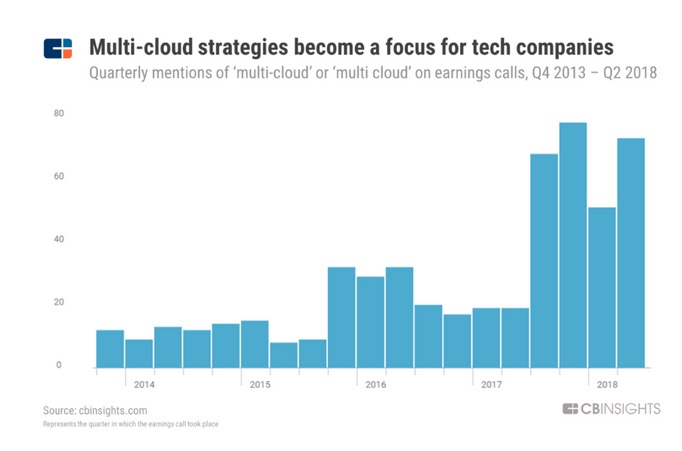

I found some interesting data points and information about multi-cloud strategies in the following CB Insights article: Here’s Why Amazon Is No Shoo-In To Win The $513B Global Cloud Market. Read the article and you will find some interesting facts and a technological perspective.

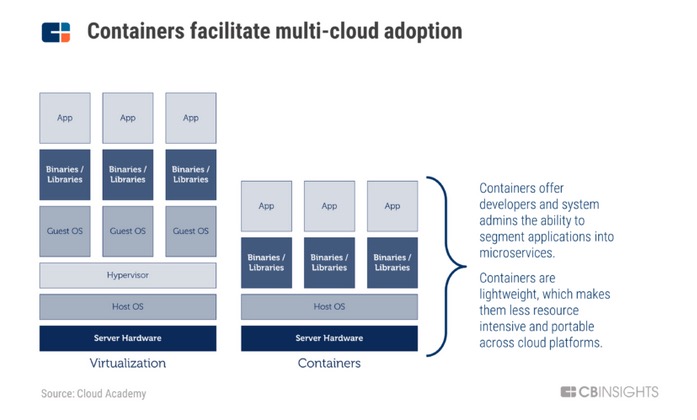

Here is the one thing that, in my view, is important for PLM vendors: containers facilitate multi-cloud adoption. Remember the question of why PLM vendors should learn about DevOps and Kubernetes?

Containers communicate using application programming interfaces (APIs). APIs allow microservices to work together to operate a complete application, no matter where they are located (even across multiple cloud providers). When an application needs to be updated, developers only need to edit the individual microservice. There is no longer the need to update an entire application. For example, if the retailer above wants to edit the store locator function on its app, it can do so without updating the entire app itself. But while the introduction of microservices and containers have improved software development, deployment, and run time, they have also introduced additional complexity — there are more components operating in more environments.
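To make the quoted idea a bit more concrete, here is a minimal Python sketch of my own (it is not taken from the article). It assumes a hypothetical service registry that maps a service name to wherever that service currently runs. The class names, service names, provider labels, and URLs are all made up for illustration, and no real network calls are made.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    provider: str  # hypothetical label such as "aws", "azure" or "gcp"
    base_url: str  # hypothetical URL; ".invalid" never resolves to a real host


class ServiceRegistry:
    """Maps a service name to the endpoint where that service currently runs."""

    def __init__(self):
        self._endpoints = {}

    def register(self, name, endpoint):
        # Registering an existing name replaces only that one service's entry.
        self._endpoints[name] = endpoint

    def url_for(self, name, path):
        # Callers ask for a service by name and never hard-code its location.
        endpoint = self._endpoints[name]
        return endpoint.base_url.rstrip("/") + "/" + path.lstrip("/")


registry = ServiceRegistry()
registry.register("catalog", Endpoint("aws", "https://catalog.aws.example.invalid/api"))
registry.register("store-locator", Endpoint("azure", "https://locator.azure.example.invalid/api"))

before = registry.url_for("store-locator", "/stores?zip=02139")

# Move only the store locator to another provider; the catalog entry is untouched.
registry.register("store-locator", Endpoint("gcp", "https://locator.gcp.example.invalid/api/"))

after = registry.url_for("store-locator", "/stores?zip=02139")
catalog_url = registry.url_for("catalog", "/items/42")
```

The calling code is the same before and after the move: only the registry entry for one service changed. A real deployment would rely on service discovery from the container platform rather than a hand-written dictionary, so treat this only as an illustration of the principle.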

The CB Insights passage on microservices reminded me of the discussion I had on my blog about microservices and PLM architecture: What PLM systems are actually supporting micro-service architecture. As companies move to multi-cloud environments, the question of PLM architecture will become more critical. Somehow, the puzzle of existing systems, new PLM implementations, and multiple companies working together will have to come together. It is a combination of functional requirements and cost effectiveness.

What is my conclusion? PLM vendors are certifying their products for multiple cloud infrastructures, which is a natural starting point for multi-cloud strategies. Today you can get most PLM software hosted on AWS and some of these products on Microsoft Azure. My hunch is that GCP is less of a priority, but that is not the point. While you can get any specific PLM system on one of the IaaS hosting services, it is not clear to me how these online services can be intertwined to operate together. Most PLM systems are designed around a single-database architecture combined with a flexible data model. The granularity of services can become a bottleneck for older PLM systems in supporting multi-cloud strategies. While this sounds like a purely technical aspect, it can have a long-lasting impact on the architecture, cost, and business models of future PLM cloud services. Just my thoughts…

Best, Oleg

Disclaimer: I’m co-founder and CEO of OpenBOM, developing a cloud-based bill of materials and inventory management tool for manufacturing companies, hardware startups, and supply chains. My opinion can be unintentionally biased.

The post PLM multi-clouds appeared first on Beyond PLM (Product Lifecycle Management) Blog.
